Context - AI In Ecommerce.

According to researchers, information and communication technologies, machine learning, digitalization, robotics, and artificial intelligence (AI) will fuel the fourth industrial revolution.

Machines will increasingly make decisions, which will significantly influence corporate marketing strategies and society (Syam and Sharma 2018; Dwivedi et al. 2019).

Over the next 20 years, the AI revolution is expected to have a larger effect than the industrial and digital revolutions combined (Makridakis 2017).

Studies suggest that intelligent goods and services are not merely a fad but have the potential to revolutionize the world (Sonia et al. 2020).

Researchers trace AI's history through two "hype cycles": the first (1950–1983), followed by a revival (1983–2010), and the second (2011–2017).

The next phase (2018–2035) is expected to be the future of brains, minds, and machines (Aggarwal 2018; Simon et al. 2019).

According to Frey and Osborne (2017), AI will have a big influence on sales, marketing, and customer service, and 47 percent of US occupations might be automated by 2033.

Experts expect that AI will have a significant impact on three industries: retail, education, and health care (Ostrom et al. 2018).

With its high share of manual labor and thin profit margins, the retail business, particularly e-commerce, is a natural fit for AI applications (Weber and Schütte 2019).

E-commerce and online service companies such as Netflix often use AI to personalize webpages and provide product suggestions.

Amazon.com and other tech and retail giants are investing aggressively in research and development to advance AI applications such as the Alexa voice assistant, Echo smart speakers, and the Prime Air drone delivery program, among others.

In fact, Amazon's cloud platform, Amazon Web Services (AWS), provides AI and machine learning capabilities to other businesses (Weber and Schütte 2019).

This paper discusses AI and its applications in the business processes of an e-commerce firm to better understand the function and value of AI in e-commerce.

Before we begin, however, we must first understand the notion of AI, which the next section covers in depth.

WHAT IS ARTIFICIAL INTELLIGENCE AS APPLIED TO E-COMMERCE?

Before considering AI, it is important to define "intelligence": a person's capacity to learn, comprehend, or cope with new circumstances; to think abstractly; and to influence one's environment using knowledge (Merriam-Webster.com 2020).

A broader definition describes intelligence as the ability to acquire and apply memory, knowledge, experience, reasoning, imagination, judgment, opinions, facts, skills, calculations, information, and language in order to calculate, classify, generalize, and perceive relationships; solve problems; plan and think abstractly; comprehend complex ideas; learn quickly; overcome obstacles; and adapt efficiently to new situations, either by changing oneself or by changing one's environment (Legg and Hutter 2006; Paschen et al. 2019).

How Does AI Mimic Human Intelligence?

AI uses machine learning to replicate human intelligence:

- To learn how to respond to certain activities, the computer builds a propensity model from algorithms and historical data.

- The propensity model then begins to make predictions (for example, scoring sales leads).
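The two steps above can be illustrated with a minimal propensity model. The sketch below assumes a logistic-regression model trained by plain gradient descent on hypothetical historical lead data; the features `pages_viewed` and `emails_opened` are illustrative and do not come from the source. Production systems would typically rely on a machine learning library and much richer data.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def train_propensity_model(rows, labels, lr=0.05, epochs=2000):
    """Step 1: learn logistic-regression weights from historical data.

    rows   -- list of feature vectors, one per past lead
    labels -- 1 if that lead converted, else 0
    """
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error: predicted probability minus the actual outcome.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        # Gradient-descent step on the average log-loss gradient.
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias

def score_lead(weights, bias, features):
    """Step 2: predict the probability (0 to 1) that a new lead converts."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

# Hypothetical history: [pages_viewed, emails_opened] and whether the lead converted.
history = [[1, 0], [2, 1], [3, 0], [8, 4], [10, 5], [12, 3]]
converted = [0, 0, 0, 1, 1, 1]
weights, bias = train_propensity_model(history, converted)

hot_lead = score_lead(weights, bias, [11, 4])
cold_lead = score_lead(weights, bias, [1, 0])
print(f"hot lead: {hot_lead:.2f}, cold lead: {cold_lead:.2f}")
```

Leads can then be ranked by score so that sales staff contact the highest-propensity prospects first.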

The origins of AI can be traced back to the question of how far a computer can partly or entirely replace humans in performing work (Weber and Schütte 2019).

As a result, the marketing literature defines AI in terms of human intelligence.

- Researchers, for example, describe AI as a system that demonstrates features of human intelligence (Huang and Rust 2018), mimics intelligent human behavior (Syam and Sharma 2018), or exhibits nonbiological intelligence (Tegmark 2017).

- According to McCarthy (2007), AI is "the science and engineering of making intelligent machines, especially intelligent computer programs."

- McCarthy adds that AI "is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable."

- These definitions make the concept of AI dependent on human intelligence (Bock et al. 2020).

Can AI Supersede Human Intelligence?

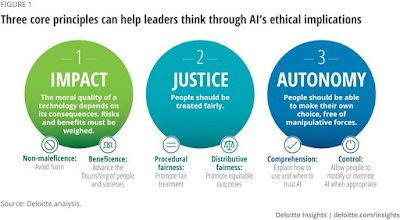

Because of bounded rationality, which stems from limited knowledge, limited cognitive capacity, and limited time to make judgments, people sometimes take actions that do not lead to the optimal outcome (Kahneman and Tversky 1979; Dawid 1999).

According to some scientists, machines can demonstrate human-like intelligence in two ways: by behaving intelligently (performing activities such as remembering, learning, reasoning, perceiving, and problem-solving in goal-directed action) and by behaving rationally (doing the "right thing" under ambiguity) (Paschen et al. 2019).

Using Big Data and deep learning, AI can discern inclinations, intentions, and patterns that are beyond the intellectual capabilities of the human brain.

- The human brain can evaluate and draw conclusions from only limited data, whereas machines can process billions of data points.

- AI is advancing through four "intelligence stages" (mechanical, analytical, intuitive, and empathetic) to gain sophisticated skills such as reasoning, planning, conceptual learning, creativity, common sense, cross-domain thinking, and even self-awareness (Huang and Rust 2018; Bock et al. 2020).

- In this light, Kaplan and Haenlein's (2019) definition seems more relevant: AI is "a system's ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation."

HOW IS ARTIFICIAL INTELLIGENCE USED IN E-COMMERCE BUSINESS PROCESSES?

Apart from product suggestions, online merchants use AI to provide chatbot services, analyze customer feedback, and offer tailored services to online customers.

Companies engaged in e-commerce carry out a variety of business processes, such as marketing, buying, selling, and servicing products and services.

- To carry out marketing, discovery, transaction processing, and product and customer service, these enterprises rely on e-commerce applications and internet-based technologies.

- E-commerce websites use the Internet to conduct interactive marketing, ordering, payment, and customer service activities.

E-commerce also includes processes related to e-business, such as suppliers and customers using extranets to access inventory databases (transaction processing), sales and customer service representatives using the internet to access customer relationship management (CRM) systems (service and support), and customers participating in product development via email and social media (marketing/discovery) (O'Brien and Marakas 2011).

- Researchers argue that AI can boost corporate performance because AI solutions are faster, cheaper, and less prone to human error (Huang and Rust 2018; Canhoto and Clear 2020).

- The following sections therefore examine how AI contributes to different e-commerce business operations.

How Is Artificial Intelligence Used In Marketing?

- Marketers can use AI to better understand their customers and improve their experiences. AI-powered marketing can produce predictive customer analyses and a more focused, individually designed customer journey, increasing the return on investment (ROI) of each customer interaction.

- AI is often used in marketing campaigns where speed is critical. AI systems learn how to engage effectively with consumers from data and customer profiles, then send them personalized messages at the right moment without requiring marketing staff involvement, which improves productivity.

How Is Artificial Intelligence Used In Market Research?

Until the third industrial revolution, businesses used information technology exclusively for data processing and transmission.

The fourth industrial revolution, on the other hand, will enable computers to make suitable and trustworthy judgments (Syam and Sharma 2018).

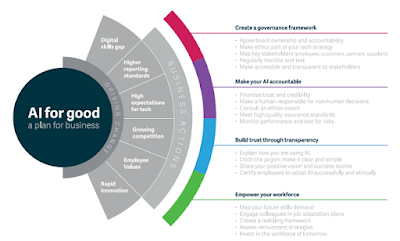

According to researchers, large organizations that fail to adopt cutting-edge technologies such as AI will be washed away by the competition (Stone et al. 2020).

The basic goal of market research is to identify the most accurate customer segments.

The selected segments are then targeted with appropriate products, offered at appropriate prices, delivered through a suitable distribution plan, and supported with reasonable promotional and communication tactics (Syam and Sharma 2018).

Previously, segmentation relied either on "classic" approaches, such as cluster analysis, or on "more contemporary" techniques, such as hidden Markov models, support vector machines, artificial neural networks (ANN), classification and regression trees (CART), and genetic algorithms.

Machine learning capabilities developed in the new century have improved the efficiency of these segmentation algorithms.

For example, AI-enabled ANN models can help B2B e-commerce enterprises solve marketing problems (Wilson and Bettis-Outland 2020).

Marketers have access to vast amounts of unstructured data (Big Data), which they can segment using unsupervised neural networks (Hruschka and Natter 1999).

Profitability or customer lifetime value (CLV) segments can now be identified using decision trees driven by machine learning (Florez-Lopez and Ramon-Jeronimo 2009).
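Hruschka and Natter (1999) compare neural networks with K-means for cluster-based segmentation. As a simpler illustration of unsupervised segmentation, the sketch below implements plain k-means; the two features (orders per year and average order value) and the customer data are hypothetical.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Plain k-means clustering. Returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # random initial centres
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each customer joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster in place
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, labels

# Hypothetical customers: (orders per year, average order value in dollars).
customers = [(2, 20), (3, 25), (2, 22), (20, 150), (22, 160), (19, 155)]
centroids, labels = kmeans(customers, k=2)
for customer, segment in zip(customers, labels):
    print(customer, "-> segment", segment)
```

Because k-means is distance-based, features would normally be standardized first; otherwise the larger-scale feature (here, order value) dominates the clustering.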

AI can sift through massive volumes of textual and non-textual user-generated content on social media sites to uncover user requirements, preferences, attitudes, and behaviors.

For instance, IBM Watson AI can recognize psychographic features represented in text to provide marketers with important insights for new product development or innovation (IBM 2020).

AI can also discover themes and patterns in users' posts to understand the user experience, and these insights can be used to design strategies that improve it.

AI also helps collect, sort, and analyze market intelligence, that is, information about external market factors and players that may affect consumer preferences and actions.

For example, AI systems driven by machine learning and natural language processing algorithms can detect fake news among large numbers of blog posts, social media posts, and other sources (Berthon and Pitt 2018).

Similarly, competitive intelligence can be derived from unstructured data (news, social media, website content, etc.) by recognizing themes or keywords (Paschen et al. 2019).
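At its very simplest, recognizing recurring keywords can be approximated by counting how many documents mention each term. The sketch below is a deliberately basic document-frequency count with a hand-written stop-word list; both the list and the sample posts are hypothetical, and the systems cited above rely on far more sophisticated natural language processing.

```python
import re
from collections import Counter

# Hypothetical, minimal stop-word list; real systems use much larger ones.
STOPWORDS = {"the", "and", "for", "with", "all", "its", "this", "that", "are", "by", "on"}

def top_keywords(documents, n=3):
    """Return the n terms that appear in the most documents."""
    doc_freq = Counter()
    for doc in documents:
        tokens = re.findall(r"[a-z]+", doc.lower())
        # Count each term at most once per document; sort for a deterministic tie order.
        terms = sorted({t for t in tokens if t not in STOPWORDS and len(t) > 2})
        doc_freq.update(terms)
    return [term for term, _ in doc_freq.most_common(n)]

# Hypothetical posts about a competitor.
posts = [
    "Competitor X launches free shipping on all orders",
    "Free shipping weekend announced by Competitor X",
    "Competitor X cuts prices and offers free returns",
]
print(top_keywords(posts))
```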

Artificial Intelligence For Market Stimulation.

Market stimulation goes hand in hand with marketing, which is defined as "the activity, set of institutions, and processes for creating, communicating, delivering, and exchanging offerings that have value for customers, clients, partners, and society at large" (American Marketing Association 2017).

The marketing mix usually consists of four distinct but interconnected components:

- product,

- price,

- place, and

- promotion (McCarthy 1960).

However, the concept of marketing has evolved to the point where technological adaptation is required, and AI now affects each component of the marketing mix: product (hyper-personalization, new product development, automatic recommendations, etc.), price (price management, personalized dynamic pricing, etc.), place (convenience, speed, simple sales process, 24/7 chatbot support, etc.), and promotion (personalized communication, unique user identifiers, etc.) (Jarek et al. 2019; Dumitriu and Popescu 2020).

Of the five AI categories (image recognition, text recognition, decision-making, speech recognition, and autonomous robots and vehicles), the first three are heavily employed in marketing.

Marketers are more hesitant to deploy the remaining two because of their cost and unpredictability.

Tech giants such as Google, Amazon, Microsoft, and Apple, on the other hand, are investing in speech recognition and autonomous robots and vehicles (Jarek et al. 2019).

As a result, AI has had a significant impact on modern marketing practices; for example, routine, time-consuming, and repeatable jobs (data collection, analysis, image search, processing) have been automated; strategic and creative activities to build a competitive advantage are emphasized; and business enterprises are designing innovative ways to deliver customer value.

It has also created a marketing environment in which companies that provide AI solutions are in high demand (Jarek et al. 2019).

How Is Artificial Intelligence Being Used For Transaction Processing?

How Is Artificial Intelligence Being Used For Terms Negotiation?

Negotiation is the art (rather than the science) of getting what you want through bargaining or one-on-one interaction.

In an e-commerce transaction, the majority of interactions are electronic, such as through email, social media, text chat, or phone.

In this setting, AI can be applied as a functional science to provide a competitive edge in the negotiation process (McKendrick 2019).

- Negotiation resembles a persuasive debate in which a proponent and an opponent iteratively present a sequence of arguments, each offering counterarguments to refute the other's position (Huang and Lin 2005).

- Scientists are teaching chatbots and virtual assistants to think several steps ahead and to analyze how particular statements may influence the outcome of a negotiation, based on its components (i.e., negotiation set, protocol, collection of tactics, and rule of deal) (Reynolds 2017).

- These AI negotiation bots can work on behalf of an e-retailer 24/7 to seek out customers and automatically negotiate the best terms based on the administrator's requirements or even market conditions (Krasadakis 2017).
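To make "negotiating based on the administrator's requirements" concrete, the sketch below implements a simple time-dependent concession strategy: a seller agent starts at a target price and concedes linearly toward a reservation (floor) price set by an administrator, accepting any buyer offer that meets its current ask. This is a textbook-style toy, not the dialogue-based systems described by Reynolds (2017) or Krasadakis (2017); all function names and numbers are hypothetical.

```python
def seller_ask(round_no, max_rounds, target, reservation):
    """Linear concession: the ask moves from target to reservation over max_rounds."""
    progress = min(round_no / max_rounds, 1.0)
    return target - progress * (target - reservation)

def negotiate(buyer_offers, target, reservation, max_rounds):
    """Play buyer offers against the seller agent.

    Returns (round_no, agreed_price) for the first acceptable offer,
    or None if no deal is reached within max_rounds.
    """
    for round_no, offer in enumerate(buyer_offers[: max_rounds + 1]):
        ask = seller_ask(round_no, max_rounds, target, reservation)
        if offer >= ask:  # the ask never drops below the reservation price
            return round_no, offer
        print(f"round {round_no}: buyer offers {offer}, seller counters at {ask:.2f}")
    return None

# Hypothetical administrator settings: list price 100, floor 80, 4 rounds of concession.
deal = negotiate([70, 78, 84, 88, 90], target=100, reservation=80, max_rounds=4)
print("deal:", deal)
```

A linear schedule is only one option; concession curves that stay near the target early and drop late (or the reverse) change how aggressively the agent bargains.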

It is very difficult for online retailers to attract, serve, and retain customers when they communicate only through graphical user interfaces (websites).

Customers, meanwhile, have no opportunity to bargain for a better deal (Huang and Lin 2005).

Natural language interfaces for human–computer interaction, on the other hand, may successfully tackle this challenge (Jusoh 2018).

E-commerce is a fast-paced sector where prices fluctuate often.

E-commerce enterprises use AI to price their products and services dynamically, adjusting prices in real time based on market conditions (demand and supply) (Kephart et al. 2000).
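As a minimal illustration of demand-supply pricing, the sketch below scales a base price by the ratio of current demand to available supply and clamps the result between a floor and a ceiling. The `sensitivity` exponent and the bounds are hypothetical parameters chosen for illustration; the software agents studied by Kephart et al. (2000) use considerably more elaborate strategies.

```python
def dynamic_price(base_price, demand, supply, sensitivity=0.5, floor=None, ceiling=None):
    """Adjust base_price by (demand / supply) ** sensitivity, then clamp to [floor, ceiling]."""
    if supply <= 0:
        raise ValueError("supply must be positive")
    ratio = demand / supply  # > 1 means demand outstrips supply
    price = base_price * ratio ** sensitivity
    if floor is not None:
        price = max(price, floor)
    if ceiling is not None:
        price = min(price, ceiling)
    return round(price, 2)

# Balanced market, a demand spike, and a demand slump (hypothetical figures).
print(dynamic_price(50.0, demand=100, supply=100))              # unchanged price
print(dynamic_price(50.0, demand=400, supply=100, ceiling=80))  # capped by ceiling
print(dynamic_price(50.0, demand=25, supply=100, floor=30))     # held at floor
```

A sensitivity below 1 dampens price swings; the floor and ceiling keep the rule within limits a merchandiser is willing to accept.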

How Is Artificial Intelligence Being Used For Order Prioritization and Order Selection?

Alibaba has released its Fashion AI technology to boost sales.

- Customers can upload photos of products they want to buy to the Taobao e-commerce site, and the system automatically searches for comparable items for sale (Simon 2019).

- Similarly, AI can gather real-time data by tracking a customer's online behavior on the company's own or a competitor's website to decide whether to offer a price reduction, or it can search the company's database to check whether earlier product suggestions were accepted or rejected (Canhoto and Clear 2020).

Resupply optimization is another key AI application.

- By calculating the best time and amount for placing an order with the central warehouse and suppliers, AI can cut inventory expenses (Stone et al. 2020).

- This can help with a variety of concerns, including reducing the quantity of unsold items, optimizing warehouse shelf space, and improving cash flow.

- Individual order and delivery (personalization) may be optimized using AI algorithms (Zanker et al. 2019), and complicated jobs like same-day delivery can be simplified (Kawa et al. 2018).
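The "best time and amount" for an order can be illustrated with two classic inventory formulas: the economic order quantity, EOQ = sqrt(2DS/H) (annual demand D, cost per order S, annual holding cost per unit H), and a reorder point equal to expected demand over the supplier lead time plus safety stock. In the sketch below, a simple moving average stands in for the demand forecast; an AI system would typically replace that function with a learned forecasting model. All figures are hypothetical.

```python
import math

def forecast_daily_demand(recent_daily_sales, window=7):
    """Placeholder forecast: mean of the last `window` days of sales."""
    recent = recent_daily_sales[-window:]
    return sum(recent) / len(recent)

def economic_order_quantity(annual_demand, order_cost, holding_cost_per_unit):
    """Classic EOQ: the order size that balances ordering and holding costs."""
    return math.sqrt(2 * annual_demand * order_cost / holding_cost_per_unit)

def reorder_point(daily_demand, lead_time_days, safety_stock=0.0):
    """Reorder when stock falls to expected lead-time demand plus a buffer."""
    return daily_demand * lead_time_days + safety_stock

sales = [18, 22, 19, 21, 20, 23, 17]  # hypothetical units sold per day
daily = forecast_daily_demand(sales)
qty = economic_order_quantity(daily * 365, order_cost=50.0, holding_cost_per_unit=2.0)
rop = reorder_point(daily, lead_time_days=5, safety_stock=30)
print(f"order about {qty:.0f} units whenever stock falls to {rop:.0f}")
```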

How Is Artificial Intelligence Being Used For Order Receipt?

AI can use predictive systems to evaluate prospects (customers) based on their propensity to buy (high-quality leads) (Järvinen and Taiminen 2016), use emotional AI to answer common questions and overcome customer objections (Paschen et al. 2019), and automate and speed up the checkout process (Paschen et al. 2019).

Amazon and other leading e-commerce businesses have launched voice-assisted ordering (e.g., via Amazon Echo) (Holmqvist et al. 2017).

Complex AI models are deployed for sales forecasting (Dwivedi et al. 2019), store assortments (Shankar 2018), and personalizing searches, recommendations, prices, and promotions (Montgomery and Smith 2009).

On a range of devices, AI can automate service interactions and provide individualized and relevant information (Bock et al. 2020).

How Is Artificial Intelligence Being Used For Order Billing/Payment Management?

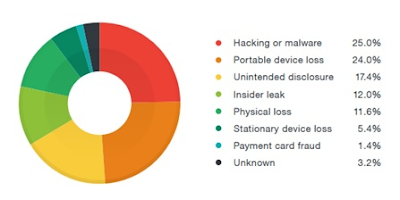

Invoicing, payment optimization, and fraud detection are three areas where AI can assist e-commerce businesses (Mejia 2019).

Billing and invoice processing are among the most difficult and time-consuming tasks in a company.

AI applications can help firms match customer invoices to the payments received (Dwivedi et al. 2019).
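The core of invoice-to-payment matching can be sketched as a rule-based pass: match on an invoice reference when the payment carries one, otherwise on a single open invoice from the same customer with the same amount, and route everything else to manual review. This is a hypothetical baseline written for illustration (the `Invoice` and `Payment` classes and the tolerance are assumptions); AI-enabled billing systems would learn fuzzier matches, such as partial payments or mistyped references.

```python
from dataclasses import dataclass

@dataclass
class Invoice:
    invoice_id: str
    customer: str
    amount: float

@dataclass
class Payment:
    payment_id: str
    customer: str
    amount: float
    reference: str = ""  # e.g. an invoice number quoted by the customer

def match_payments(invoices, payments, tolerance=0.01):
    """Return (matches, unmatched): matches maps payment_id -> invoice_id."""
    open_invoices = {inv.invoice_id: inv for inv in invoices}
    matches, unmatched = {}, []
    for pay in payments:
        # Rule 1: use an explicit invoice reference if one is given.
        invoice = open_invoices.get(pay.reference)
        if invoice is None:
            # Rule 2: otherwise accept a single open invoice with same customer and amount.
            candidates = [
                inv for inv in open_invoices.values()
                if inv.customer == pay.customer and abs(inv.amount - pay.amount) <= tolerance
            ]
            invoice = candidates[0] if len(candidates) == 1 else None
        if invoice is not None and abs(invoice.amount - pay.amount) <= tolerance:
            matches[pay.payment_id] = invoice.invoice_id
            del open_invoices[invoice.invoice_id]  # an invoice can be settled only once
        else:
            unmatched.append(pay.payment_id)  # discrepancy: route to manual review
    return matches, unmatched

invoices = [Invoice("INV-1", "acme", 120.0), Invoice("INV-2", "acme", 80.0),
            Invoice("INV-3", "zenith", 80.0)]
payments = [Payment("P-1", "acme", 80.0),
            Payment("P-2", "zenith", 80.0, reference="INV-3"),
            Payment("P-3", "acme", 100.0, reference="INV-1")]
matches, unmatched = match_payments(invoices, payments)
print(matches, unmatched)
```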

- Manual and semi-automated billing procedures cannot cope with large numbers of customer payments, whereas AI-enabled billing systems can handle large volumes of data and eliminate anomalies, inconsistencies, and discrepancies in bills (Bajpai 2020).

- Online transactions are one of the most convenient ways to make a payment using a computer and the internet.

- Customers may use their e-wallets to make purchases (Kalia 2016; Kalia et al. 2017a).

- Regardless of the security precautions in place, every online transaction has the risk of fraud (Papadopoulos and Brooks 2011).

With an autonomous, decision-oriented, advanced fraud detection system, AI-powered billing software can prevent fraud before it happens (Khattri and Singh 2018).

For example, Fraudster detects payment fraud using historical data on transactions, billing and shipping addresses, and IP connections (Canhoto and Clear 2020).
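A heavily simplified version of the signals mentioned above (billing and shipping addresses, IP location, and past transaction history) can be combined into a rule-based risk score. The rule weights, the 3-standard-deviation threshold, the 0.5 blocking cut-off, and the field names below are hypothetical; a machine learning system would instead learn such weights from labelled historical transactions.

```python
from statistics import mean, pstdev

# Hypothetical rule weights; an ML model would learn these from labelled fraud cases.
RULE_WEIGHTS = {
    "billing/shipping country mismatch": 0.3,
    "IP/billing country mismatch": 0.3,
    "amount far above customer history": 0.4,
}

def fraud_risk_score(txn, past_amounts):
    """Return (score between 0 and 1, list of triggered rules) for one transaction."""
    triggered = []
    if txn["billing_country"] != txn["shipping_country"]:
        triggered.append("billing/shipping country mismatch")
    if txn["ip_country"] != txn["billing_country"]:
        triggered.append("IP/billing country mismatch")
    if len(past_amounts) >= 2:
        mu, sigma = mean(past_amounts), pstdev(past_amounts)
        # Flag amounts more than 3 standard deviations above this customer's norm.
        if sigma > 0 and (txn["amount"] - mu) / sigma > 3:
            triggered.append("amount far above customer history")
    score = round(sum(RULE_WEIGHTS[rule] for rule in triggered), 2)
    return score, triggered

history = [40.0, 55.0, 50.0, 45.0, 60.0]  # hypothetical past order amounts
normal = {"amount": 52.0, "billing_country": "US", "shipping_country": "US", "ip_country": "US"}
risky = {"amount": 900.0, "billing_country": "US", "shipping_country": "FR", "ip_country": "BR"}
for txn in (normal, risky):
    score, reasons = fraud_risk_score(txn, history)
    print(score, "BLOCK" if score >= 0.5 else "allow", reasons)
```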

How Is Artificial Intelligence Being Used For Support and Service?

How Is Artificial Intelligence Being Used For Order Fulfillment/Order Scheduling?

Order scheduling and fulfillment include responsibilities such as picking up or delivering items and services in the correct amount and quality at the proper time and location (Weber and Schütte 2019).

The e-commerce sector needs exceptionally efficient fulfillment operations because of erratic order patterns, limited order processing time, and short delivery schedules (Leung et al. 2018).

Here, AI systems can actively monitor and improve these operations, taking into account factors such as order demand and product characteristics to automate the optimal logistics plan (Lam et al. 2015; Paschen et al. 2019).

- According to researchers, sustainable supply chains and reverse logistics are the most important subjects for current and future AI research (Dhamija and Bag 2019).

- Meanwhile, top e-commerce corporations such as Amazon.com are investing in robots and space-age fulfillment technology, such as order delivery by drone (Dirican 2015).

Beyond fulfillment, reverse logistics is also a challenge.

- Products are often returned without their original packaging, and seasonal collections and similarities between products can make the process more difficult.

- With AI-powered automated image recognition, returned items can be compared with catalogue photographs to categorize them (Kumar et al. 2014).
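Assuming an image model has already turned each photo into a numeric feature vector (an embedding), categorizing a return reduces to a nearest-neighbour search over the catalogue. The sketch below uses cosine similarity with a hypothetical confidence threshold; the vectors and SKU names are made up, and the image model that would produce them is not shown.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def classify_return(return_embedding, catalogue, min_similarity=0.8):
    """Match a returned item's image features to the most similar catalogue SKU.

    Returns (sku, similarity), or (None, similarity) when no catalogue item is
    similar enough and the item should go to manual inspection.
    """
    best_sku, best_sim = None, -1.0
    for sku, embedding in catalogue.items():
        sim = cosine_similarity(return_embedding, embedding)
        if sim > best_sim:
            best_sku, best_sim = sku, sim
    if best_sim < min_similarity:
        return None, best_sim
    return best_sku, best_sim

# Hypothetical 3-dimensional embeddings of catalogue photographs.
catalogue = {
    "SHIRT-RED-M": [0.9, 0.1, 0.0],
    "SHIRT-BLUE-M": [0.1, 0.9, 0.0],
    "SHOE-42": [0.0, 0.1, 0.95],
}
print(classify_return([0.85, 0.15, 0.05], catalogue))  # close to the red shirt
print(classify_return([0.5, 0.5, 0.5], catalogue))     # ambiguous: manual inspection
```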

How Is Artificial Intelligence Being Used For Support and Customer Service?

Service quality may be a game changer in the e-commerce market (Kalia et al. 2016, 2017b; Kalia 2017a, b).

- Customer service and support may benefit from AI by boosting satisfaction, building relationships, customizing help, and providing recovery in the event of service failures.

- This is known as "service AI," defined as "the configuration of technology to provide value in the internal and external service environments through flexible adaptation enabled by sensing, learning, decision-making, and actions" (Bock et al. 2020).

- Service AI therefore does more than make preprogrammed decisions; it also has the ability to learn (Makridakis 2017).

Because AI-based services are more dependable, of high quality, consistent, continuously available (24/7), and less susceptible to human errors arising from fatigue and bounded rationality, they can improve customer satisfaction (Huang and Rust 2018).

Similarly, AI makes it easier for e-commerce businesses to carry out marketing activities that build, grow, and retain customer relationships (Lo and Campos 2018).

- Virtual assistants, for example, can send out notifications to millions of customers, analyze their purchases, returns, and loyalty card information, and deliver services that are beyond human capability.

- Using structured and unstructured data related to their psychographic, demographic, and webographic characteristics, as well as online buying behavior (frequency, recency, type, and size of past purchases), AI-enabled systems can create comprehensive profiles of current or potential customers, which can then be processed using machine learning and predictive algorithms to strengthen customer relationship efforts and prospecting of potential customers (Lo and Campos 2018; Paschen et al. 2019).
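The buying-behavior part of such a profile is often summarized as recency, frequency, and monetary value (RFM). The sketch below derives those features, plus average order size, from one customer's purchase history; the field names and data are hypothetical, and in a real system these features would be combined with demographic, psychographic, and webographic data before being fed to a predictive model.

```python
from datetime import date

def rfm_profile(purchases, today):
    """Summarize (purchase_date, amount) pairs into recency/frequency/monetary features."""
    if not purchases:
        return {"recency_days": None, "frequency": 0, "monetary": 0.0, "avg_order": 0.0}
    last_purchase = max(day for day, _ in purchases)
    total = sum(amount for _, amount in purchases)
    return {
        "recency_days": (today - last_purchase).days,   # days since last purchase
        "frequency": len(purchases),                    # number of purchases
        "monetary": round(total, 2),                    # total spend
        "avg_order": round(total / len(purchases), 2),  # average order size
    }

purchases = [(date(2020, 1, 10), 40.0), (date(2020, 3, 1), 60.0), (date(2020, 4, 20), 20.0)]
print(rfm_profile(purchases, today=date(2020, 5, 1)))
```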

By learning to talk in different languages, recognizing consumers' emotional states, and retrieving information for them, AI can give a high-quality tailored experience to customers.

- A dissatisfied customer may spread negative information about the firm.

- Firms, however, can use high-quality customer feedback to design service recovery plans (Lo and Campos 2018).

Ending Remarks.

AI plays a significant role in many segments and processes of e-commerce companies.

This paper has detailed the role of AI in e-commerce business processes such as market research, market stimulation, terms negotiation, order receipt, order selection and prioritization, order billing/payment management, order scheduling/fulfillment delivery, and customer service and support.

We found that AI has a wide range of potential applications in marketing, transaction processing, and e-commerce service and support.

At the present rate of technological advancement, AI is expected to evolve from a data and information processing tool (weak AI) into a self-contained system capable of making human-like judgments (strong AI).

~ Jai Krishna Ponnappan


FREQUENTLY ASKED QUESTIONS:

How is artificial intelligence (AI) affecting the e-commerce industry?

Using AI, e-commerce sites can build customized online experiences and recommend items tailored to each buyer.

Companies such as Amazon and Netflix, which were early adopters of AI, have succeeded with personalized marketing built on the technology.

What are some instances of artificial intelligence in e-commerce applications?

The top AI applications in e-commerce and retail include:

- Purchase recommendations

- Shopping assistants that respond to voice commands

- Personalized shopping experiences

- AI-powered warehouse "pickers"

- Image and video recognition for ads

- Payment methods based on facial recognition

What are the advantages of artificial intelligence in the field of e-commerce?

- Voice commerce

- Virtual assistants

- Personalization in e-commerce

- E-commerce automation using searchandising (smart search)

- Retargeting of prospective customers

- A more efficient sales process through CRM

Which is the most popular/trending AI application in eCommerce?

One of the most promising applications of AI technology in eCommerce is assisting customers in finding items more quickly.

This can be done with chatbots or by making text search more meaningful, but visual search based on image recognition stands out as particularly promising.

In e-commerce, how does AI affect consumer satisfaction?

Customer support for ecommerce shops is improved by artificial intelligence in the form of chatbots.

Chatbots answer quickly and may deliver replies that are tailored to the individual consumer.

As a result, the user experience in the shop is smooth.

What influence does Artificial Intelligence have in the internet business world?

By automating and optimizing common procedures and activities, you may save time and money.

AI can also boost productivity and efficiency in your operations and help you make faster business decisions based on the outputs of cognitive technologies.

How can artificial intelligence (AI) enhance the consumer experience?

You can use AI to boost consumer engagement, foster brand loyalty, and increase retention.

While technology isn't a substitute for real humans, it can boost productivity by taking low-hanging fruit, such as answering frequently asked questions, off your customer support employees' plates.

What role does artificial intelligence play in increasing client loyalty?

The most significant advantage of AI is that it enables organizations to process massive volumes of data in real time.

Brands can take advantage of this capacity to make good use of the data for loyalty marketing and to positively influence consumer behavior.

What impact does AI have on how companies and organizations connect with their customers?

Conversational AI has made the communication space more fluid for both customers and companies, providing quick results for customers and making it easier for enterprises to pay attention to everyone who needs it.

What impact will artificial intelligence have on the corporate world in the future?

Artificial intelligence enables company owners to provide their clients a more tailored experience.

AI is much more efficient at analyzing large amounts of data.

It can swiftly spot patterns in data, such as previous purchase history, preferences, credit ratings, and other similar threads.

REFERENCES

Aggarwal A (2018) Resurgence of AI during 1983–2010. https://www.kdnuggets.com/2018/02/resurgence-ai-1983-2010.html. Accessed 16 May 2020.

American Marketing Association (2017) Definitions of marketing. https://www.ama.org/the-definition-of-marketing-what-is-marketing/. Accessed 17 May 2020.

Bajpai K (2020) Artificial intelligence in billing and invoice processing: The future is here! https://www.elorus.com/blog/artificial-intelligence-billing-invoice-processing/. Accessed 16 May 2020.

Berthon PR, Pitt LF (2018) Brands, truthiness and post-fact: Managing brands in a post-rational world. J Macromarketing 38:218–227.

Bock DE, Wolter JS, Ferrell OC (2020) Artificial intelligence: Disrupting what we know about services. J Serv Mark. doi: 10.1108/JSM-01-2019-0047.

Campbell C, Sands S, Ferraro C, et al. (2020) From data to action: How marketers can leverage AI. Bus Horiz 63:227–243. doi: 10.1016/j.bushor.2019.12.002.

Canhoto AI, Clear F (2020) Artificial intelligence and machine learning as business tools: A framework for diagnosing value destruction potential. Bus Horiz 63:183–193. doi: 10.1016/j.bushor.2019.11.003.

Dawid H (1999) Bounded rationality and artificial intelligence. In: Adaptive Learning by Genetic Algorithms. Springer-Verlag, Berlin, Heidelberg. doi: 10.1007/978-3-642-18142-9_2.

Dhamija P, Bag S (2019) Role of artificial intelligence in operations environment: A review and bibliometric analysis. TQM J. doi: 10.1108/TQM-10-2019-0243.

Dirican C (2015) The impacts of robotics, artificial intelligence on business and economics. Procedia Soc Behav Sci 195:564–573. doi: 10.1016/j.sbspro.2015.06.134.

Dumitriu D, Popescu MA (2020) Artificial Intelligence solutions for digital marketing. Procedia Manuf 46:630–636. doi: 10.1016/j.promfg.2020.03.090.

Dwivedi YK, Hughes L, Ismagilova E, et al. (2019) Artificial intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int J Inf Manage 101994. doi: 10.1016/j.ijinfomgt.2019.08.002.

Florez-Lopez R, Ramon-Jeronimo JM (2009) Marketing segmentation through machine learning models: An approach based on customer relationship management and customer profitability accounting. Soc Sci Comput Rev 27:96–117.

Frey CB, Osborne MA (2017) The future of employment: How susceptible are jobs to computerisation? Technol Forecast Soc Change 114:254–280. doi: 10.1016/j.techfore.2016.08.019.

Holmqvist J, Van Vaerenbergh Y, Grönroos C (2017) Language use in services: Recent advances and directions for future research. J Bus Res 72:114–118. doi: 10.1016/j.jbusres.2016.10.005.

Hruschka H, Natter M (1999) Comparing performance of feedforward neural nets and K-means for cluster-based market segmentation. Eur J Oper Res 114:346–353.

Huang M, Rust RT (2018) Artificial intelligence in service. J Serv Res 21:155–172. doi: 10.1177/1094670517752459.

Huang S, Lin F (2005) Designing intelligent sales-agent for online selling. In: Proceedings of the 7th International Conference on Electronic Commerce. Association for Computing Machinery, New York, NY/Xi’an, China, pp. 279–286.

IBM (2020) Getting started with natural language understanding. https://cloud.ibm.com/docs/services/natural-language-understanding?topic=natural-language-understanding-getting-started#analyze-phrase. Accessed 17 May 2020.

Jarek K, Mazurek G (2019) Marketing and artificial intelligence. Cent Eur Bus Rev 8:46–55. doi: 10.18267/j.cebr.213.

Järvinen J, Taiminen H (2016) Harnessing marketing automation for B2B content marketing. Ind Mark Manag 54:164–175. doi: 10.1016/j.indmarman.2015.07.002.

Jusoh S (2018) Intelligent conversational agent for online sales. In: 10th International Conference on Electronics, Computers and Artificial Intelligence (ECAI). IEEE, Iasi, Romania, pp. 1–4.

Kahneman D, Tversky A (1979) Prospect theory: An analysis of decision under risk. Econometrica 47:263–292.

Kalia P (2016) Tsunamic e-commerce in India: The third wave. Glob Anal 5:47–49.

Kalia P (2017a) Service quality scales in online retail: Methodological issues. Int J Oper Prod Manag 37:630–663. doi: 10.1108/IJOPM-03-2015-0133.

Kalia P (2017b) Webographics and perceived service quality: An Indian e-retail context. Int J Serv Econ Manag 8:152–168. doi: 10.1504/IJSEM.2017.10012733.

Kalia P, Arora R, Kumalo S (2016) E-service quality, consumer satisfaction and future purchase intentions in e-retail. e-Service J 10:24–41. doi: 10.2979/eservicej.10.1.02.

Kalia P, Kaur N, Singh T (2017a) E-commerce in India: Evolution and revolution of online retail. In: Khosrow-Pour M (ed) Mobile Commerce: Concepts, Methodologies, Tools, and Applications. IGI Global, Hershey, PA, pp. 736–758.

Kalia P, Law P, Arora R (2017b) Determining impact of demographics on perceived service quality in online retail. In: Khosrow-Pour M (ed) Encyclopedia of Information Science and Technology, 4th edn. IGI Global, Hershey, PA, pp. 2882–2896.

Kaplan A, Haenlein M (2019) Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus Horiz 62:15–25. doi: 10.1016/j.bushor.2018.08.004.

Kawa A, Pieranski B, Zdrenka W (2018) Dynamic configuration of same-day delivery in e-commerce. In: Sieminski A, Kozierkiewicz A, Nunez M, Ha QT (eds) Modern Approaches for Intelligent Information and Database Systems. Springer, Berlin, pp. 305–315.

Kephart JO, Hanson JE, Greenwald AR (2000) Dynamic pricing by software agents. Comput Netw 32:731–752.

Khattri V, Singh DK (2018) Parameters of automated fraud detection techniques during online transactions. J Financ Crime 25:702–720. doi: 10.1108/JFC-03-2017-0024.

Krasadakis G (2017) AI buyer/seller negotiation agents. https://medium.com/innovation-machine/artificial-intelligence-negotiation-agents-49d666cd9952. Accessed 16 May 2020.

Kumar DT, Soleimani H, Kannan G (2014) Forecasting return products in an integrated forward/reverse supply chain utilizing an ANFIS. Int J Appl Math Comput Sci 24:669–682. doi: 10.2478/amcs-2014-0049.

Lam HY, Choy KL, Ho GTS, et al. (2015) A knowledge-based logistics operations planning system for mitigating risk in warehouse order fulfillment. Int J Prod Econ 170:763–779. doi: 10.1016/j.ijpe.2015.01.005.

Legg S, Hutter M (2006) A collection of definitions of intelligence. In: Advances in Artificial General Intelligence: Concepts, Architectures and Algorithms. IOS Press, Amsterdam, pp. 17–24.

Leung KH, Choy KL, Siu PKY, et al. (2018) A B2C e-commerce intelligent system for re-engineering the e-order fulfilment process. Expert Syst Appl 91:386–401. doi: 10.1016/j.eswa.2017.09.026.

Lo FY, Campos N (2018) Blending Internet-of-Things (IoT) solutions into relationship marketing strategies. Technol Forecast Soc Change 137:10–18. doi: 10.1016/j.techfore.2018.09.029.

Makridakis S (2017) The forthcoming artificial intelligence (AI) revolution: Its impact on society and firms. Futures 90:46–60. doi: 10.1016/j.futures.2017.03.006.

McCarthy EJ (1960) Basic Marketing: A Managerial Approach. Richard D. Irwin, Inc., Homewood, IL.

McCarthy J (2007) What is artificial intelligence? https://www-formal.stanford.edu/jmc/whatisai/node1.html. Accessed 17 May 2020.

McKendrick J (2019) Now, AI can give you an edge … in negotiations. https://www.rtinsights.com/now-ai-can-give-you-an-edge-in-negotiations. Accessed 16 May 2020.

Mejia N (2019) Artificial intelligence in payment processing – Current applications. https://emerj.com/ai-sector-overviews/artificial-intelligence-in-payment-processing-current-applications/. Accessed 16 May 2020.

Merriam-Webster.com (2020) Definition of intelligence. https://www.merriam-webster.com/dictionary/intelligence. Accessed 17 May 2020.

Montgomery AL, Smith MD (2009) Prospects for personalization on the Internet. J Interact Mark 23:130–137. doi: 10.1016/j.intmar.2009.02.001.

O’Brien JA, Marakas GM (2011) Management Information Systems, 10th edn. McGraw-Hill/Irwin, New York, NY.

Ostrom AL, Fotheringham D, Bitner MJ (2018) Customer acceptance of AI in service encounters: Understanding antecedents and consequences. In: Maglio PP, Kieliszewski CA, Spohrer JC, et al. (eds) Handbook of Service Science. Springer Nature, Cham, pp. 77–103.

Papadopoulos A, Brooks G (2011) The investigation of credit card fraud in Cyprus: reviewing police “effectiveness.” J Financ Crime 18:222–234. doi: 10.1108/13590791111147442.

Paschen J, Kietzmann J, Kietzmann TC (2019) Artificial intelligence (AI) and its implications for market knowledge in B2B marketing. J Bus Ind Mark 34:1410–1419. doi: 10.1108/JBIM-10-2018-0295.

Reynolds M (2017) Chatbots learn how to negotiate and drive a hard bargain. New Scientist 234:7. doi: 10.1016/S0262-4079(17)31142-9.

Shankar V (2018) How artificial intelligence (AI) is reshaping retailing. J Retail 94:vi–xi. doi: 10.1016/S0022-4359(18)30076-9.

Simon JP (2019) Artificial intelligence: Scope, players, markets and geography. Digit Policy Regul Gov 21:208–237. doi: 10.1108/DPRG-08-2018-0039.

Sonia N, Sharma EK, Singh N, Kapoor A (2020) Artificial intelligence in market business: From research and innovation to deployment market. Procedia Comput Sci 167:2200–2210. doi: 10.1016/j.procs.2020.03.272.

Stone M, Aravopoulou E, Ekinci Y, et al. (2020) Artificial intelligence (AI) in strategic marketing decision-making: A research agenda. Bottom Line. doi: 10.1108/BL-03-2020-0022.

Syam N, Sharma A (2018) Waiting for a sales renaissance in the fourth industrial revolution: Machine learning and artificial intelligence in sales research and practice. Ind Mark Manag 69:135–146. doi: 10.1016/j.indmarman.2017.12.019.

Tegmark M (2017) Life 3.0: Being Human in the Age of Artificial Intelligence. Alfred A. Knopf, New York, NY.

Weber FD, Schütte R (2019) State-of-the-art and adoption of artificial intelligence in retailing. Digit Policy Regul Gov 21:264–279. doi: 10.1108/DPRG-09-2018-0050.

Wilson RD, Bettis-Outland H (2020) Can artificial neural network models be used to improve the analysis of B2B marketing research data? J Bus Ind Mark 35:495–507. doi: 10.1108/JBIM-01-2019-0060.

Zanker M, Rook L, Jannach D (2019) Measuring the impact of online personalisation: Past, present and future. Int J Hum Comput Stud 131:160–168. doi: 10.1016/j.ijhcs.2019.06.006.